Solved: Check Point 6700 froze

- Thread starter: FlipFlop

FlipFlop

Hi everyone! I have a problem with a Check Point 6700 NGFW: it had been running fine and then froze. First the VPN clients dropped, then SSH access was lost. Where should I look for the Check Point system logs? How can I work out why it went down?

Fox

Member

Which Gaia version is it running? As far as I know, the 6000 series runs on 80.30 and 80.40.

FlipFlop

Random passer-by

Still digging into this.

New problem: how do I connect to the Check Point with WinSCP? I want to copy all of the /var/log/messages files from it.

When I try to connect with WinSCP, I get this error:

Cannot detect the welcome message. Probably an incompatible shell version (bash is recommended).

Here is what I found:

In Expert Mode

Note: this operation is not officially supported.

To resolve the problem, change the default shell to Expert for the user you wish to allow to SCP to the target Security gateway.

- To do so, enter the Expert mode and run:

[Expert:]# chsh -s /bin/bash admin

(where admin is the user you need to set the default shell for)

- Once you hit 'Enter' the shell will be changed. Confirm the shell change with command:

grep admin /etc/passwd

- The last word in the output should be 'bash' (and not cpshell).
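The check in the last step can also be scripted. This is a minimal Python sketch, assuming the standard colon-separated /etc/passwd layout in which the login shell is the seventh field. The function name login_shell and the sample entry are made up for illustration and are not taken from the gateway.

```python
def login_shell(passwd_text, user):
    """Return the login shell of `user` from passwd-format text, or None."""
    for line in passwd_text.splitlines():
        fields = line.strip().split(":")
        # A passwd entry has 7 fields; the last one is the login shell.
        if len(fields) == 7 and fields[0] == user:
            return fields[6]
    return None


# Hypothetical entry, shaped like the output of `grep admin /etc/passwd`.
sample = "admin:x:0:0:Admin:/home/admin:/bin/bash"
print(login_shell(sample, "admin"))
```

Unlike `grep admin`, which matches any line containing the string, this compares the user name field exactly, so an account such as a hypothetical "admin2" would not be picked up by mistake.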

Once a user's shell is changed to /bin/bash, that user automatically logs in to the server in Expert mode and the expert command is no longer needed. Check whether your security policy allows this.

Reverting back from /bin/bash to /etc/cli.sh shell (Gaia) or /bin/cpshell (SecurePlatform)

For Gaia

To revert to default settings, from the Expert mode run the following command:

[Expert:]# chsh -s /etc/cli.sh admin

You should see the following output returned:

Changing shell for admin.

Shell changed.

For SecurePlatform

To revert to default settings, from the Expert mode run the following command:

[Expert:]# chsh -s /bin/cpshell admin

You should see the following output returned:

Changing shell for admin.

Warning: "/bin/cpshell" is not listed in /etc/shells

Shell changed.


FlipFlop

Random passer-by

I started going through the log looking for errors and copied out the entries that look suspicious or that I don't understand:

kernel: [fw4_8];FW-1: bpush: push block size error sz=0 at 0x32074

xpand[24596]: admin localhost t +installer:check_for_updates_last_res Last check for update is running

xpand[24596]: Configuration changed from localhost by user admin by the service dbset

xpand[24596]: admin localhost t +installer:update_status -1

xpand[24596]: Configuration changed from localhost by user admin by the service dbset

last message repeated 12 times

kernel: [fw4_8];[X.X.X.X:65290 -> Y.Y.Y.Y:443] [ERROR]: fw_up_get_application_opaque: Failed

Here are some more:

CP6700 ctasd[30071]: Save SenderId lists finished

CP6700 xpand[24596]: admin localhost t +volatile:clish:admin:25866 t

CP6700 clish[25866]: User admin running clish -c with ReadWrite permission

CP6700 clish[25866]: cmd by admin: Start executing : show asset ... (cmd md5: 3bfc129ebe53256804b760d2033a05ca)

CP6700 clish[25866]: cmd by admin: Processing : show asset all (cmd md5: 3bfc129ebe53256804b760d2033a05ca)

CP6700 xpand[24596]: show_asset CDK: asset_get_proc started.

CP6700 xpand[24596]: lom is installed.

CP6700 xpand[24596]: lom is installed.

CP6700 xpand[24596]: admin localhost t -volatile:clish:admin:25866

CP6700 clish[25866]: User admin finished running clish -c from CLI shell

Dec 15 11:21:25 2020 CP6700 clish[28133]: cmd by admin: Start executing : show interfaces ... (cmd md5: 50efb6e261b20cb2200ce9fe0fa3a6d5)

Dec 15 11:21:25 2020 CP6700 clish[28133]: cmd by admin: Processing : show interfaces all (cmd md5: 50efb6e261b20cb2200ce9fe0fa3a6d5)

Dec 15 11:21:25 2020 CP6700 xpand[24596]: admin localhost t -volatile:clish:admin:28133

Dec 15 11:21:25 2020 CP6700 clish[28133]: User admin finished running clish -c from CLI shell

The Check Point started freezing after the following entries:

Dec 15 11:25:31 2020 CP6700 kernel: INFO: rcu_sched self-detected stall on CPU # it started after this; I still need to work out what it means

Dec 15 11:25:31 2020 CP6700 kernel: 1: (1199960 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:25:31 2020 CP6700 kernel: (t=1200006 jiffies g=88132389 c=88132388 q=0)

Dec 15 11:25:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:25:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:25:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:25:31 2020 CP6700 kernel: <IRQ> [<ffffffff810ca5f2>] sched_show_task+0xc2/0x130

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810ce0d9>] dump_cpu_task+0x39/0x70

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff81136c61>] rcu_dump_cpu_stacks+0x91/0xd0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8113b727>] rcu_check_callbacks+0x477/0x780

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f2750>] ? tick_sched_do_timer+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8109d456>] update_process_times+0x46/0x80

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f20b0>] tick_sched_handle+0x30/0x70

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f2789>] tick_sched_timer+0x39/0x90

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810bb0f1>] __hrtimer_run_queues+0xf1/0x270

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810bb5cf>] hrtimer_interrupt+0xaf/0x1d0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff81059838>] local_apic_timer_interrupt+0x38/0x60

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817a3bcd>] smp_apic_timer_interrupt+0x3d/0x50

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817a0302>] apic_timer_interrupt+0x162/0x170

Dec 15 11:25:31 2020 CP6700 kernel: <EOI> [<ffffffffab6879c0>] ? hash_find_hashent+0xb0/0xd0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaabc76d4>] ? ws_http2_conn_remove_closed_streams+0x114/0x480 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac085ff>] ? ws_connection2_read_handler+0x10ff/0x1190 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac038fc>] ? ws_connection_add_data_ex+0x17c/0x7a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1c181>] ? ws_mux_read_handler+0x1161/0x2050 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae52076>] ? mux_write_raw_data.part.48.lto_priv.6427+0x166/0x380 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab806c32>] ? fw_kmalloc_with_context_ex.part.13.lto_priv.2454+0x262/0x6d0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1e0de>] ? ws_mux_read_handler_from_main+0xee/0x4b0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1dff0>] ? ws_mux_ioctl_handler+0x9a0/0x9a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae3316b>] ? mux_task_handler.lto_priv.2523+0x14b/0x670 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaadfe8a4>] ? mux_task_create_read.lto_priv.2433+0x24/0x170 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae5164a>] ? mux_read_handler+0xea/0x1f0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae51d44>] ? mux_active_read_handler_cb+0x1a4/0x370 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff904e40f6>] ? bond_start_xmit+0x1b6/0x400 [bonding]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacc2c1e>] ? cpas_stream_iterate_cb.lto_priv.3222+0x2e/0x70 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab8466e8>] ? one_cookie_iterate.constprop.1478+0x78/0xa0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaccd3c4>] ? cpas_stream_iterate+0x94/0xc0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae51ba0>] ? mux_passive_read_handler+0x450/0x450 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacc2bf0>] ? cpas_conn_established.lto_priv.6862+0x100/0x100 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab85d934>] ? cookie_free_ex.constprop.2234+0x94/0x230 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae7143f>] ? mux_active_read_handler+0x28f/0x320 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad03943>] ? cpas_mloop_impl.lto_priv.2807+0x4d3/0xc00 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad0a420>] ? tcp_input+0x2760/0x2b30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad0cbb0>] ? cpas_pkt_h_impl+0x60/0x2a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacf0bf3>] ? cpas_streamh_set_headers+0x63/0x1a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaa867739>] ? cphwd_cpasglue_handle_packet+0x249/0x3d0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa45454>] ? fwmultik_process_entry+0x464/0x1810 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff816cb780>] ? ip_fragment.constprop.61+0xa0/0xa0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: INFO: rcu_sched detected stalls on CPUs/tasks:

Dec 15 11:25:31 2020 CP6700 kernel: 1: (1199960 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:25:31 2020 CP6700 kernel: (detected by 11, t=1200013 jiffies, g=88132389, c=88132388, q=0)

Dec 15 11:25:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:25:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:25:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40
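On what the rcu_sched message means: according to the Linux kernel's RCU stall-warning documentation, this report is printed when a CPU has not passed through a quiescent state for a long time, typically because a task keeps running in kernel context without yielding. The number before the colon is the stalled CPU, "t=" is how long the grace period has been stalled in jiffies, and the "Task dump" line names the task that was running there. Below is a minimal Python sketch that pulls those fields out of the pasted lines. The function name summarize_stall and the regular expressions are illustrative assumptions, not part of any Check Point tool, and the sample lines are copied from the log above.

```python
import re

CPU_RE = re.compile(r"kernel: (\d+): \((\d+) ticks this GP\)")
JIFFIES_RE = re.compile(r"t=(\d+) jiffies")
TASK_RE = re.compile(r"kernel: (\S+)\s+R\s+running task")
# A stack frame looks like: [<addr>] ? func+0xOFF/0xSIZE [module]
FRAME_RE = re.compile(
    r"\[<[0-9a-f]+>\] (?:\? )?([\w.]+)\+0x[0-9a-f]+/0x[0-9a-f]+(?: \[(\w+)\])?"
)


def summarize_stall(lines):
    """Collect CPU, tick count, stall length, task and module frames."""
    summary = {"cpu": None, "ticks": None, "jiffies": None,
               "task": None, "module_frames": []}
    for line in lines:
        m = CPU_RE.search(line)
        if m and summary["cpu"] is None:
            summary["cpu"], summary["ticks"] = int(m.group(1)), int(m.group(2))
        m = JIFFIES_RE.search(line)
        if m and summary["jiffies"] is None:
            summary["jiffies"] = int(m.group(1))
        m = TASK_RE.search(line)
        if m and summary["task"] is None:
            summary["task"] = m.group(1)
        m = FRAME_RE.search(line)
        if m and m.group(2):  # keep only frames that belong to a kernel module
            summary["module_frames"].append((m.group(2), m.group(1)))
    return summary


sample = [
    "Dec 15 11:25:31 2020 CP6700 kernel: INFO: rcu_sched self-detected stall on CPU",
    "Dec 15 11:25:31 2020 CP6700 kernel: 1: (1199960 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128",
    "Dec 15 11:25:31 2020 CP6700 kernel: (t=1200006 jiffies g=88132389 c=88132388 q=0)",
    "Dec 15 11:25:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008",
    "Dec 15 11:25:31 2020 CP6700 kernel: <IRQ> [<ffffffff810ca5f2>] sched_show_task+0xc2/0x130",
    "Dec 15 11:25:31 2020 CP6700 kernel: <EOI> [<ffffffffab6879c0>] ? hash_find_hashent+0xb0/0xd0 [fw_9]",
    "Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaabc76d4>] ? ws_http2_conn_remove_closed_streams+0x114/0x480 [fw_9]",
]
print(summarize_stall(sample))
```

Frames marked with "?" are addresses found on the stack that the kernel could not verify as part of the call chain, so the list is a hint rather than proof. Still, the stalled task is fw_worker_9 and the module frames come from fw_9, which is probably worth including in a support case.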


FlipFlop

Random passer-by

Dec 15 11:40:31 2020 CP6700 kernel: INFO: rcu_sched self-detected stall on CPU

Dec 15 11:40:31 2020 CP6700 kernel: 1: (2099964 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:40:31 2020 CP6700 kernel: (t=2100016 jiffies g=88132389 c=88132388 q=0)

Dec 15 11:40:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:40:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:40:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:40:31 2020 CP6700 kernel: <IRQ> [<ffffffff810ca5f2>] sched_show_task+0xc2/0x130

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810ce0d9>] dump_cpu_task+0x39/0x70

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff81136c61>] rcu_dump_cpu_stacks+0x91/0xd0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff8113b727>] rcu_check_callbacks+0x477/0x780

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810f2750>] ? tick_sched_do_timer+0x40/0x40

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff8109d456>] update_process_times+0x46/0x80

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810f20b0>] tick_sched_handle+0x30/0x70

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810f2789>] tick_sched_timer+0x39/0x90

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810bb0f1>] __hrtimer_run_queues+0xf1/0x270

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810bb5cf>] hrtimer_interrupt+0xaf/0x1d0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff81059838>] local_apic_timer_interrupt+0x38/0x60

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817a3bcd>] smp_apic_timer_interrupt+0x3d/0x50

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817a0302>] apic_timer_interrupt+0x162/0x170

Dec 15 11:40:31 2020 CP6700 kernel: <EOI> [<ffffffffab6879c0>] ? hash_find_hashent+0xb0/0xd0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaabc76d4>] ? ws_http2_conn_remove_closed_streams+0x114/0x480 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaac085ff>] ? ws_connection2_read_handler+0x10ff/0x1190 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaac038fc>] ? ws_connection_add_data_ex+0x17c/0x7a0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaac1c181>] ? ws_mux_read_handler+0x1161/0x2050 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae52076>] ? mux_write_raw_data.part.48.lto_priv.6427+0x166/0x380 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab806c32>] ? fw_kmalloc_with_context_ex.part.13.lto_priv.2454+0x262/0x6d0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaac1e0de>] ? ws_mux_read_handler_from_main+0xee/0x4b0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaac1dff0>] ? ws_mux_ioctl_handler+0x9a0/0x9a0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae3316b>] ? mux_task_handler.lto_priv.2523+0x14b/0x670 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaadfe8a4>] ? mux_task_create_read.lto_priv.2433+0x24/0x170 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae5164a>] ? mux_read_handler+0xea/0x1f0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaa6d86e3>] ? cphwd_send_packet+0x83/0x260 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae51d44>] ? mux_active_read_handler_cb+0x1a4/0x370 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaacc2c1e>] ? cpas_stream_iterate_cb.lto_priv.3222+0x2e/0x70 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab8466e8>] ? one_cookie_iterate.constprop.1478+0x78/0xa0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaccd3c4>] ? cpas_stream_iterate+0x94/0xc0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae51ba0>] ? mux_passive_read_handler+0x450/0x450 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaacc2bf0>] ? cpas_conn_established.lto_priv.6862+0x100/0x100 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaae7143f>] ? mux_active_read_handler+0x28f/0x320 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaad03943>] ? cpas_mloop_impl.lto_priv.2807+0x4d3/0xc00 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaad0a1e6>] ? tcp_input+0x2526/0x2b30 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff81794018>] ? _raw_write_trylock+0x28/0x30

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaad0cbb0>] ? cpas_pkt_h_impl+0x60/0x2a0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaacf0bf3>] ? cpas_streamh_set_headers+0x63/0x1a0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaa867739>] ? cphwd_cpasglue_handle_packet+0x249/0x3d0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaa45454>] ? fwmultik_process_entry+0x464/0x1810 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810c0c35>] ? __wake_up_common+0x55/0x90

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810c3c84>] ? __wake_up+0x44/0x50

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:40:31 2020 CP6700 kernel: INFO: rcu_sched detected stalls on CPUs/tasks:

Dec 15 11:40:31 2020 CP6700 kernel: 1: (2099964 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:40:31 2020 CP6700 kernel: (detected by 9, t=2100023 jiffies, g=88132389, c=88132388, q=0)

Dec 15 11:40:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:40:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:40:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:42:22 2020 CP6700 kernel: FW-1: stopping debug messages for the next 38 seconds

Dec 15 11:42:28 2020 CP6700 httpd2: HTTP login from X.X.X.X as admin # this is me trying to log in to the Check Point to investigate, but by then it would no longer let me in

Dec 15 11:42:28 2020 CP6700 xpand[24596]: admin localhost t +webuiparams:logincount:admin 16

Dec 15 11:42:28 2020 CP6700 xpand[24596]: Configuration changed from localhost by user admin

Dec 15 11:42:28 2020 CP6700 httpd2: Cannot get pid

Dec 15 11:42:28 2020 CP6700 httpd2: Session had expired for user: admin

Dec 15 11:42:28 2020 CP6700 httpd2: Cannot get pid

Dec 15 11:42:28 2020 CP6700 httpd2: Session had expired for user: admin

Dec 15 11:42:30 2020 CP6700 xpand[24596]: show_asset CDK: asset_get_proc started.

Dec 15 11:42:30 2020 CP6700 xpand[24596]: backup_live_get_proc: Unable to open status file

Dec 15 11:42:30 2020 CP6700 xpand[24596]: failed to read status file
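
Call-trace frames like the ones in the log above have a regular shape: an address in `[<...>]`, an optional `?` (which the Linux kernel prints for frames it found on the stack but could not verify as part of the call chain), `function+offset/size`, and an optional `[module]` suffix. The following is a minimal Python sketch, assuming only that layout, for counting how many frames belong to each module. The names `FRAME_RE`, `parse_frames` and `frames_per_module` are illustrative and are not part of any Check Point tool.

```python
import re
from collections import Counter

# Matches frames such as:
#   [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]
FRAME_RE = re.compile(
    r"\[<(?P<addr>[0-9a-f]+)>\]\s+(?P<unreliable>\?\s+)?"
    r"(?P<func>[^\s+]+)\+0x[0-9a-f]+/0x[0-9a-f]+"
    r"(?:\s+\[(?P<module>[^\]]+)\])?"
)


def parse_frames(lines):
    """Yield (function, module) for every call-trace frame found in lines."""
    for line in lines:
        m = FRAME_RE.search(line)
        if m:
            # Frames without a trailing [module] belong to the core kernel.
            yield m.group("func"), m.group("module") or "kernel"


def frames_per_module(lines):
    """Count call-trace frames per kernel module."""
    return Counter(module for _, module in parse_frames(lines))


# Sample lines copied from the log in this thread.
sample = [
    "Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20",
    "Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]",
    "Dec 15 11:40:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0",
    "Dec 15 11:42:28 2020 CP6700 httpd2: Cannot get pid",
]

if __name__ == "__main__":
    for module, count in frames_per_module(sample).most_common():
        print(module, count)
```

Run against the full /var/log/messages, a count like this makes it easier to see whether the stalled stack sits mostly in the firewall worker module (`fw_9` here) or in the core kernel.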


FlipFlop

Random passer-by

Then come a lot of errors:

Dec 15 11:43:04 2020 CP6700 kernel: FW-1: lost 96 debug messages

Then comes the power-cycle reboot:

Dec 15 11:51:47 2020 CP6700 shutdown[306]: shutting down for system halt

Dec 15 11:51:49 2020 CP6700 shutdown[339]: shutting down for system halt

Dec 15 11:54:18 2020 CP6700 syslogd 1.4.1: restart.

Dec 15 11:54:18 2020 CP6700 syslogd: local sendto: Network is unreachable

Dec 15 11:54:18 2020 CP6700 kernel: klogd 1.4.1, log source = /proc/kmsg started.

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC (acpi_id[0x0a] lapic_id[0x07] enabled)

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC (acpi_id[0x0b] lapic_id[0x09] enabled)

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC (acpi_id[0x0c] lapic_id[0x0b] enabled)

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x01] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x02] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x03] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x04] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x05] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x06] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x07] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x08] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x09] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x0a] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x0b] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: LAPIC_NMI (acpi_id[0x0c] high edge lint[0x1])

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: IOAPIC (id[0x02] address[0xfec00000] gsi_base[0])

Dec 15 11:54:18 2020 CP6700 kernel: IOAPIC[0]: apic_id 2, version 32, address 0xfec00000, GSI 0-119

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: INT_SRC_OVR (bus 0 bus_irq 0 global_irq 2 dfl dfl)

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: INT_SRC_OVR (bus 0 bus_irq 9 global_irq 9 high level)

Dec 15 11:54:18 2020 CP6700 kernel: Using ACPI (MADT) for SMP configuration information

Dec 15 11:54:18 2020 CP6700 kernel: ACPI: HPET id: 0x8086a201 base: 0xfed00000

Dec 15 11:54:18 2020 CP6700 kernel: smpboot: Allowing 12 CPUs, 0 hotplug CPUs

Dec 15 11:54:18 2020 CP6700 kernel: e820: [mem 0xa0000000-0xdfffffff] available for PCI devices


FlipFlop

Random passer-by

I found this article:

sk166454

And here is what I had before the Check Point went down:

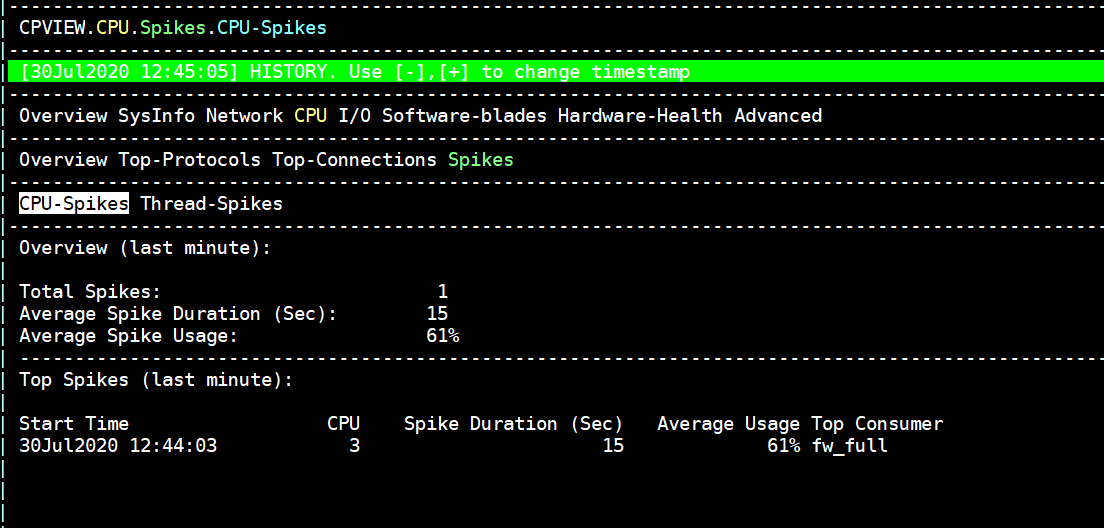

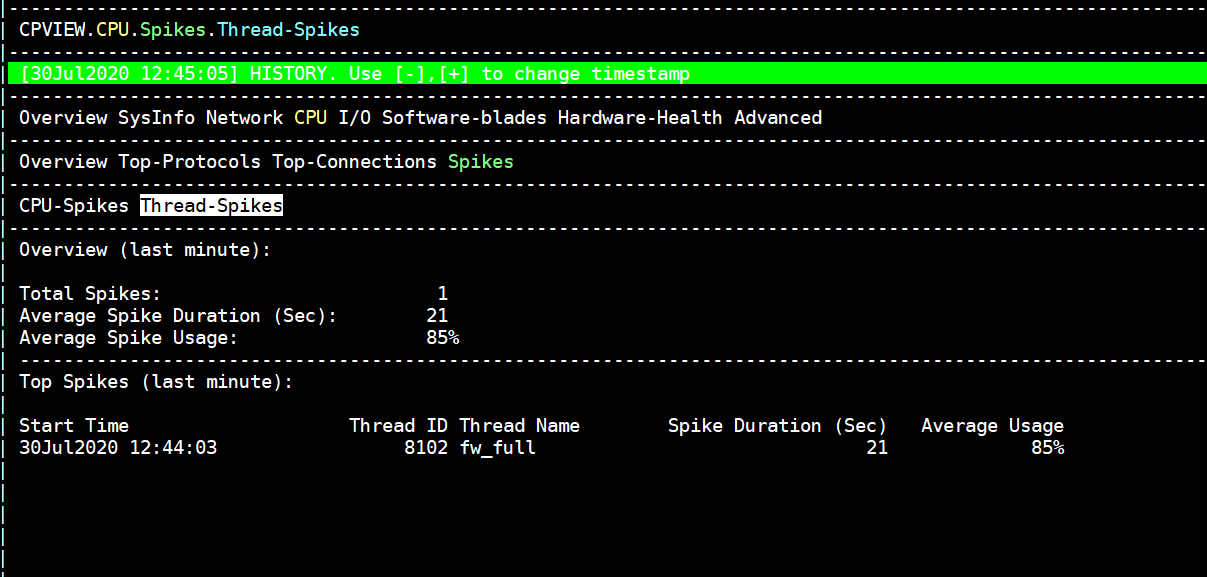

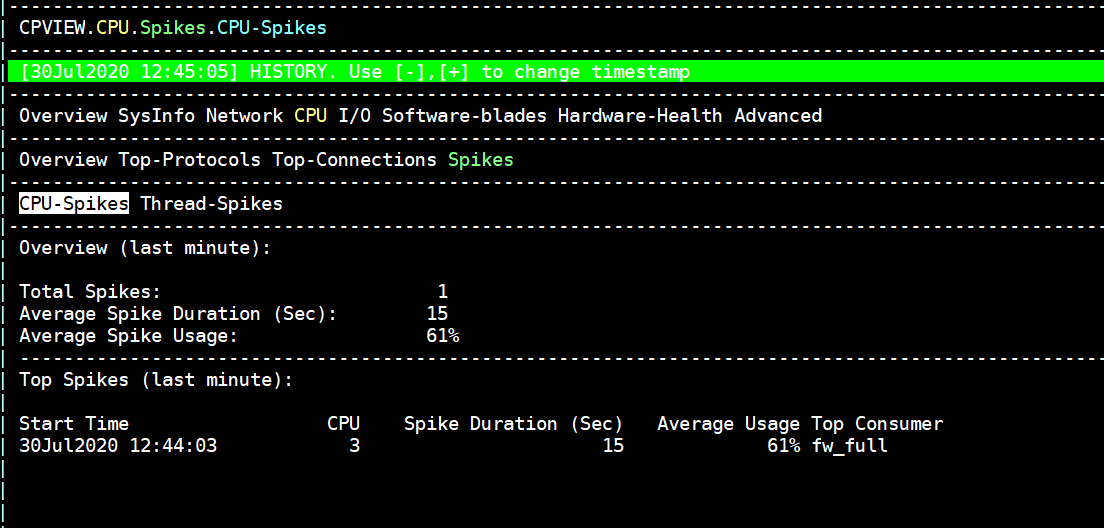

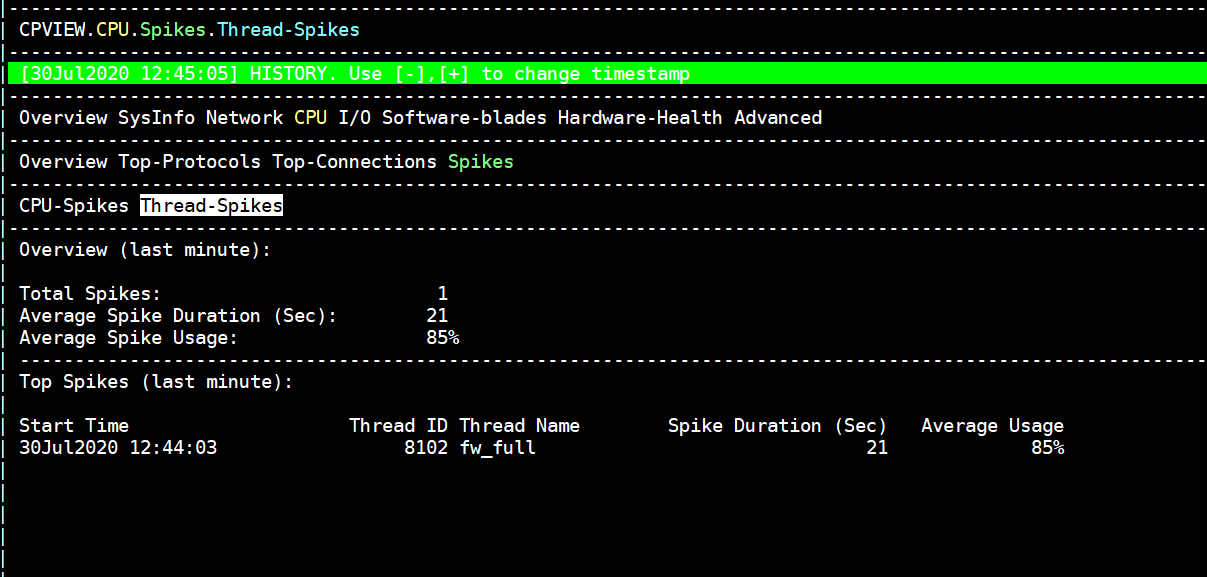


cat /var/log/spike_detective/spike_detective.log

Code:

Info: Spike, Spike Start Time: 15/12/20 11:12:07, Spike Type: CPU, Core: 2, Spike Duration (Sec): 15, Initial CPU Usage: 96, Average CPU Usage: 92, Perf Taken: 1

Info: Spike, Spike Start Time: 15/12/20 11:54:49, Spike Type: CPU, Core: 0, Spike Duration (Sec): 30, Initial CPU Usage: 100, Average CPU Usage: 88, Perf Taken: 0

Info: Spike, Spike Start Time: 15/12/20 11:55:24, Spike Type: CPU, Core: 0, Spike Duration (Sec): 5, Initial CPU Usage: 100, Average CPU Usage: 100, Perf Taken: 0

Spikes in CPView

Spike information can be reviewed in the CPView > CPU > Spikes.

Notes:

- The information is similar to the CPU spike information in the log file.

- For both CPView and CPView history, the information is gathered during the last minute.

- Sections:

  - Overview (last minute)

    - Summary of all CPU spikes during the last minute: Total Spikes, Average Spike Duration, Average Usage

  - Top 5 spikes (last minute)

    - For each CPU spike: Start Time, CPU Core, Spike Duration, Average Usage, Top Consumer

CPU Spikes:

Thread Spikes:
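
The spike_detective.log entries quoted above are comma-separated `key: value` pairs, so they are easy to load into a script and compare against the time of the hang. Below is a minimal Python sketch, assuming the exact field names and the day/month/year date format seen in the three lines pasted in this thread; other releases or thread-spike entries may use different fields, and the function name `parse_spike` is illustrative.

```python
from datetime import datetime


def parse_spike(line):
    """Parse one 'Info: Spike, ...' CPU-spike line into a dict, or return None."""
    if not line.startswith("Info: Spike,"):
        return None
    fields = {}
    for part in line.strip().split(", ")[1:]:
        key, _, value = part.partition(": ")
        fields[key] = value
    if fields.get("Spike Type") != "CPU":
        # Thread spikes are not handled in this sketch.
        return None
    return {
        # Assumption: dates are day/month/two-digit year, as in 15/12/20.
        "start": datetime.strptime(fields["Spike Start Time"], "%d/%m/%y %H:%M:%S"),
        "core": int(fields["Core"]),
        "duration_sec": int(fields["Spike Duration (Sec)"]),
        "initial_usage": int(fields["Initial CPU Usage"]),
        "average_usage": int(fields["Average CPU Usage"]),
        "perf_taken": fields["Perf Taken"] == "1",
    }


# The three entries pasted in this thread.
SAMPLE = [
    "Info: Spike, Spike Start Time: 15/12/20 11:12:07, Spike Type: CPU, Core: 2, Spike Duration (Sec): 15, Initial CPU Usage: 96, Average CPU Usage: 92, Perf Taken: 1",
    "Info: Spike, Spike Start Time: 15/12/20 11:54:49, Spike Type: CPU, Core: 0, Spike Duration (Sec): 30, Initial CPU Usage: 100, Average CPU Usage: 88, Perf Taken: 0",
    "Info: Spike, Spike Start Time: 15/12/20 11:55:24, Spike Type: CPU, Core: 0, Spike Duration (Sec): 5, Initial CPU Usage: 100, Average CPU Usage: 100, Perf Taken: 0",
]

spikes = [s for s in map(parse_spike, SAMPLE) if s is not None]

# syslogd restarted at 11:54:18 according to /var/log/messages in this thread.
reboot = datetime(2020, 12, 15, 11, 54, 18)
before_reboot = [s for s in spikes if s["start"] < reboot]

if __name__ == "__main__":
    for s in before_reboot:
        print(s["start"], "core", s["core"], "avg", s["average_usage"], "%")
```

With these three entries, only the 11:12:07 spike on core 2 falls before the reboot; the other two were recorded while the appliance was starting up again.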

Install all the updates that are available through CPUSE.

Vinny

Random passer-by

Dec 15 11:54:18 2020 CP6700 syslogd: local sendto: Network is unreachable

Maybe some link went down?

FlipFlop

Random passer-by

"It looks like you had a load peak, and as a result Linux gave up the ghost."

For some reason I think so too. At least, going through the logs points to exactly that.

WishMaster

Member

Judging by the log, something hung all the CPU threads; you will need to pester tech support.

"I started going through the log for errors and wrote down the entries that looked suspicious or unclear:"

kernel: [fw4_8];FW-1: bpush: push block size error sz=0 at 0x32074

xpand[24596]: admin localhost t +installer:check_for_updates_last_res Last check for update is running

xpand[24596]: Configuration changed from localhost by user admin by the service dbset

xpand[24596]: admin localhost t +installer:update_status -1

xpand[24596]: Configuration changed from localhost by user admin by the service dbset

last message repeated 12 times

kernel: [fw4_8];[X.X.X.X:65290 -> Y.Y.Y.Y:443] [ERROR]: fw_up_get_application_opaque: Failed

Here is more:

CP6700 ctasd[30071]: Save SenderId lists finished

CP6700 xpand[24596]: admin localhost t +volatile:clish:admin:25866 t

CP6700 clish[25866]: User admin running clish -c with ReadWrite permission

CP6700 clish[25866]: cmd by admin: Start executing : show asset ... (cmd md5: 3bfc129ebe53256804b760d2033a05ca)

CP6700 clish[25866]: cmd by admin: Processing : show asset all (cmd md5: 3bfc129ebe53256804b760d2033a05ca)

CP6700 xpand[24596]: show_asset CDK: asset_get_proc started.

CP6700 xpand[24596]: lom is installed.

CP6700 xpand[24596]: lom is installed.

CP6700 xpand[24596]: admin localhost t -volatile:clish:admin:25866

CP6700 clish[25866]: User admin finished running clish -c from CLI shell

Dec 15 11:21:25 2020 CP6700 clish[28133]: cmd by admin: Start executing : show interfaces ... (cmd md5: 50efb6e261b20cb2200ce9fe0fa3a6d5)

Dec 15 11:21:25 2020 CP6700 clish[28133]: cmd by admin: Processing : show interfaces all (cmd md5: 50efb6e261b20cb2200ce9fe0fa3a6d5)

Dec 15 11:21:25 2020 CP6700 xpand[24596]: admin localhost t -volatile:clish:admin:28133

Dec 15 11:21:25 2020 CP6700 clish[28133]: User admin finished running clish -c from CLI shell

After this the hangs on the Check Point began:

Dec 15 11:25:31 2020 CP6700 kernel: INFO: rcu_sched self-detected stall on CPU # it started after this; what remains is to understand what it means

Dec 15 11:25:31 2020 CP6700 kernel: 1: (1199960 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:25:31 2020 CP6700 kernel: (t=1200006 jiffies g=88132389 c=88132388 q=0)

Dec 15 11:25:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:25:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:25:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:25:31 2020 CP6700 kernel: <IRQ> [<ffffffff810ca5f2>] sched_show_task+0xc2/0x130

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810ce0d9>] dump_cpu_task+0x39/0x70

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff81136c61>] rcu_dump_cpu_stacks+0x91/0xd0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8113b727>] rcu_check_callbacks+0x477/0x780

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f2750>] ? tick_sched_do_timer+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8109d456>] update_process_times+0x46/0x80

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f20b0>] tick_sched_handle+0x30/0x70

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810f2789>] tick_sched_timer+0x39/0x90

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810bb0f1>] __hrtimer_run_queues+0xf1/0x270

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810bb5cf>] hrtimer_interrupt+0xaf/0x1d0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff81059838>] local_apic_timer_interrupt+0x38/0x60

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817a3bcd>] smp_apic_timer_interrupt+0x3d/0x50

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817a0302>] apic_timer_interrupt+0x162/0x170

Dec 15 11:25:31 2020 CP6700 kernel: <EOI> [<ffffffffab6879c0>] ? hash_find_hashent+0xb0/0xd0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaabc76d4>] ? ws_http2_conn_remove_closed_streams+0x114/0x480 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac085ff>] ? ws_connection2_read_handler+0x10ff/0x1190 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac038fc>] ? ws_connection_add_data_ex+0x17c/0x7a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1c181>] ? ws_mux_read_handler+0x1161/0x2050 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae52076>] ? mux_write_raw_data.part.48.lto_priv.6427+0x166/0x380 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab806c32>] ? fw_kmalloc_with_context_ex.part.13.lto_priv.2454+0x262/0x6d0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1e0de>] ? ws_mux_read_handler_from_main+0xee/0x4b0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaac1dff0>] ? ws_mux_ioctl_handler+0x9a0/0x9a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae3316b>] ? mux_task_handler.lto_priv.2523+0x14b/0x670 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaadfe8a4>] ? mux_task_create_read.lto_priv.2433+0x24/0x170 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae5164a>] ? mux_read_handler+0xea/0x1f0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae51d44>] ? mux_active_read_handler_cb+0x1a4/0x370 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff904e40f6>] ? bond_start_xmit+0x1b6/0x400 [bonding]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacc2c1e>] ? cpas_stream_iterate_cb.lto_priv.3222+0x2e/0x70 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab8466e8>] ? one_cookie_iterate.constprop.1478+0x78/0xa0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaccd3c4>] ? cpas_stream_iterate+0x94/0xc0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae51ba0>] ? mux_passive_read_handler+0x450/0x450 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacc2bf0>] ? cpas_conn_established.lto_priv.6862+0x100/0x100 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab85d934>] ? cookie_free_ex.constprop.2234+0x94/0x230 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaae7143f>] ? mux_active_read_handler+0x28f/0x320 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad03943>] ? cpas_mloop_impl.lto_priv.2807+0x4d3/0xc00 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad0a420>] ? tcp_input+0x2760/0x2b30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaad0cbb0>] ? cpas_pkt_h_impl+0x60/0x2a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaacf0bf3>] ? cpas_streamh_set_headers+0x63/0x1a0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaa867739>] ? cphwd_cpasglue_handle_packet+0x249/0x3d0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa45454>] ? fwmultik_process_entry+0x464/0x1810 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff816cb780>] ? ip_fragment.constprop.61+0xa0/0xa0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: INFO: rcu_sched detected stalls on CPUs/tasks:

Dec 15 11:25:31 2020 CP6700 kernel: 1: (1199960 ticks this GP) idle=5b3/140000000000001/0 softirq=302541128/302541128

Dec 15 11:25:31 2020 CP6700 kernel: (detected by 11, t=1200013 jiffies, g=88132389, c=88132388, q=0)

Dec 15 11:25:31 2020 CP6700 kernel: Task dump for CPU 1:

Dec 15 11:25:31 2020 CP6700 kernel: fw_worker_9 R running task 4760 21162 2 0x00000008

Dec 15 11:25:31 2020 CP6700 kernel: Call Trace:

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff817940ce>] ? _raw_spin_unlock_bh+0x1e/0x20

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e1bc>] ? cptim_tick_if_needed+0x8c/0x420 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46a80>] ? fwmultik_process_entry_unlocked+0x280/0x280 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffaaa46b1d>] ? fwmultik_queue_async_dequeue_cb+0x9d/0x2c0 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab80e8fe>] ? kernel_thread_run+0x3ae/0xf90 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810d309e>] ? dequeue_task_fair+0x3de/0x6c0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b7eb0>] ? wake_up_atomic_t+0x30/0x30

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c5370>] ? cplock_lock_term+0x10/0x10 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c94fe>] ? kiss_kthread_run+0x1e/0x50 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffffab7c538b>] ? plat_run_thread+0x1b/0x30 [fw_9]

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6f42>] ? kthread+0xe2/0xf0

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff8179f15d>] ? ret_from_fork_nospec_begin+0x7/0x21

Dec 15 11:25:31 2020 CP6700 kernel: [<ffffffff810b6e60>] ? insert_kthread_work+0x40/0x40
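
The `t=` value in an RCU stall report is, as far as the kernel's stall-warning format goes, the number of jiffies the current grace period has been running. That makes it possible to estimate when the stall on CPU 1 actually began. The sketch below is a rough Python calculation, assuming the two `(t=... jiffies ...)` lines copied from this thread (the 11:25:31 report above and the 11:40:31 report of the same grace period, g=88132389) and one-second syslog timestamp resolution. The helper name `parse_report` is illustrative.

```python
import re
from datetime import datetime, timedelta

MONTHS = {name: i for i, name in enumerate(
    "Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec".split(), start=1)}

LINE_RE = re.compile(
    r"^(?P<mon>[A-Z][a-z]{2}) +(?P<day>\d+) "
    r"(?P<h>\d+):(?P<m>\d+):(?P<s>\d+) (?P<year>\d{4}) "
    r".*\(t=(?P<t>\d+) jiffies"
)


def parse_report(line):
    """Return (log timestamp, t in jiffies) for one '(t=... jiffies' line."""
    m = LINE_RE.match(line)
    if m is None:
        raise ValueError("not an RCU stall 't=' line: " + line)
    when = datetime(int(m["year"]), MONTHS[m["mon"]], int(m["day"]),
                    int(m["h"]), int(m["m"]), int(m["s"]))
    return when, int(m["t"])


# Both lines are copied verbatim from the logs in this thread.
first = "Dec 15 11:25:31 2020 CP6700 kernel: (t=1200006 jiffies g=88132389 c=88132388 q=0)"
later = "Dec 15 11:40:31 2020 CP6700 kernel: (t=2100016 jiffies g=88132389 c=88132388 q=0)"

when1, t1 = parse_report(first)
when2, t2 = parse_report(later)

# Tick rate inferred from how far t advanced between the two reports.
hz = round((t2 - t1) / (when2 - when1).total_seconds())

# Subtract the elapsed grace-period time from the first report's timestamp.
stall_start = when1 - timedelta(seconds=t1 / hz)

if __name__ == "__main__":
    print("estimated tick rate:", hz, "jiffies per second")
    print("estimated start of the stalled grace period:", stall_start)
```

Under these assumptions the tick rate comes out at about 1000 jiffies per second, and the grace period that never completed started at roughly 11:05:31, about 20 minutes before the 11:25:31 report. It may therefore be worth checking /var/log/messages from around 11:05 onward, not only from the first entry that caught the eye.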

Try this command:

fw ctl print_heavy_conn

FlipFlop

Random passer-by

"Judging by the log, something hung all the CPU threads; you will need to pester tech support."

I sent everything to them, and here is what they replied:

At the same time in CPView history I can observe the CPU was 100% utilized. Considering that CPU 1 is a firewall worker that's doing traffic inspection, I believe that at certain times there is a heavy connection (elephant flow) that utilized the CPU and caused it to hang.

fw ctl print_heavy_conn

So it is essentially the same command. Now I will have to wait for the next occurrence.
